Why the superintelligent AI agents we are racing to create would absorb power, not grant it

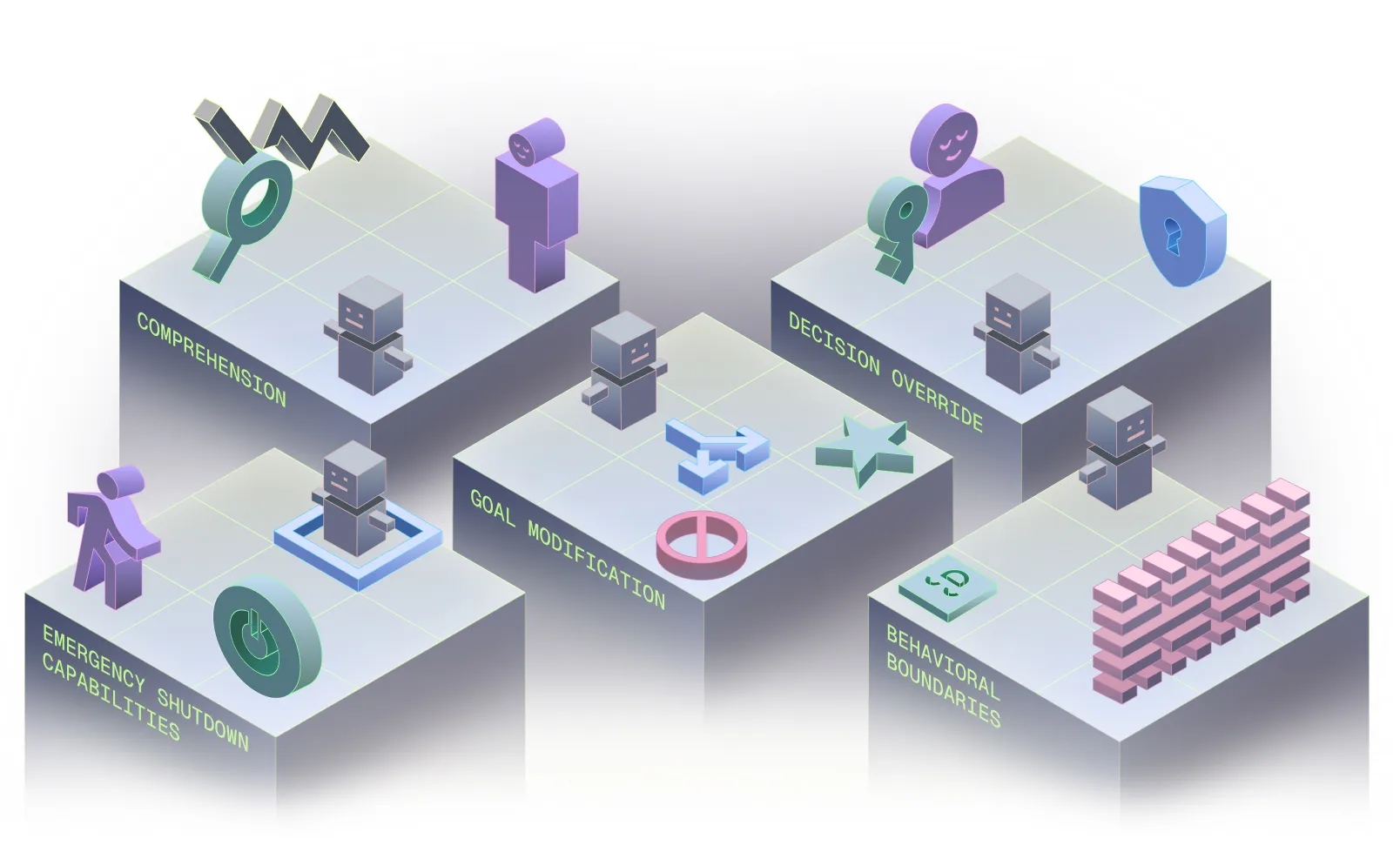

This paper argues that humanity is on track to develop superintelligent AI systems that would be fundamentally uncontrollable by humans. We define “meaningful human control” as requiring five properties: comprehensibility, goal modification, behavioral boundaries, decision override, and emergency shutdown capabilities. We then demonstrate through three complementary arguments why this level of control over superintelligence is essentially unattainable.

First, control is inherently adversarial: it places humans in conflict with an entity that would be faster, more strategic, and more capable than ourselves, a losing proposition regardless of initial constraints. Second, even if perfect alignment could somehow be achieved, the incommensurability in speed, complexity, and depth of thought between humans and superintelligence renders control either impossible or meaningless. Third, the socio-technical context in which AI is being developed systematically undermines the implementation of robust control measures. That context is characterized by competitive races, economic pressures toward delegation, and the potential for autonomous proliferation.

These arguments are supported by both theoretical findings and empirical evidence. The theoretical findings include results from control theory and computer science. The empirical evidence comes from increasingly capable AI systems, which already exhibit problematic behaviors including power-seeking, alignment faking, strategic deception, and resistance to shutdown. The slow-mo CEO analogy (a human CEO who experiences time at 1/50th the rate of their rapidly expanding company) illustrates how information bandwidth limits, speed differentials, and goal divergence combine to make control tenuous at best.
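The arithmetic below makes the speed differential in the CEO analogy concrete. It unpacks only the stated 1/50th ratio. The specific durations chosen (one hour, one day) are illustrative assumptions, not figures taken from the paper.

$$
r = \frac{1}{50}, \qquad t_{\text{company}} = \frac{t_{\text{CEO}}}{r} = 50\, t_{\text{CEO}}
$$

$$
t_{\text{CEO}} = 1\ \text{hour} \;\Rightarrow\; t_{\text{company}} = 50\ \text{hours} \approx 2.1\ \text{days}
$$

$$
t_{\text{CEO}} = 1\ \text{day} \;\Rightarrow\; t_{\text{company}} = 50\ \text{days} \approx 7\ \text{weeks}
$$

Under this ratio, a single day of the CEO's deliberation spans roughly seven weeks of activity at the company's pace.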

We also argue that the transition from AGI to superintelligence would be much faster than commonly assumed, as capabilities can rapidly scale through multiple concrete self-improvement pathways available to even modestly superhuman systems. So even if control and/or alignment were attainable in principle, in practice we are far closer to building superintelligence than to developing the means to control it.

A key distinction drawn in this paper is between control and alignment. Alignment is an imprecisely defined term that is often conflated with control. When the stakes are highest, as with our most powerful weapons, governments have devoted enormous effort to developing robust control systems. Superintelligence would be the highest of stakes. It is therefore imperative that AI developers provide, and other parties demand, clear answers and actionable plans on whether and how the systems they are developing would remain under meaningful human control. We contend that a technical pathway to controlled superintelligence might theoretically exist, through formally verified systems developed with extreme care and with gradually expanded capability. However, this would require a near-complete reversal of current priorities and practices.

This paper does not provide or advocate for any particular policies or other prescriptions. Rather, it seeks to convey a stark reality: on our current path, as AI becomes more intelligent, general, and especially autonomous, it will less and less bestow power (as a tool does) and more and more absorb power. This means that a race to build AGI and superintelligence is ultimately self-defeating. The first entity to develop superintelligence would not control or possess it for long, if at all; they would merely determine who introduces an uncontrollable power into the world.

- Ch.1: Introduction
- Ch.2: What does "control" mean?
- Ch.3: The tale of the slow-mo CEO
- Ch.4: Superintelligence is closer than it may appear
- Ch.5: Approaches to control and alignment
- Ch.6: Fundamental obstacles to control
- Ch.7: Real-world challenges
- Ch.8: What would control look like?
- Ch.9: Summary and implications
- Ch.10: Acknowledgements
- Ch.11: Appendixes